Data assessment is the process of evaluating the quality and suitability of data for a specific purpose. It involves identifying and addressing any potential issues with the data, such as missing values, incorrect data types, or inconsistencies. Data assessment can be performed manually or using automated tools.

Many different factors can be considered when assessing data quality, such as:

- Accuracy: The extent to which the data is correct and free of errors.

- Completeness: The extent to which the data is complete and includes all relevant information.

- Relevance: The extent to which the data is relevant to the intended purpose.

- Timeliness: The extent to which the data is up to date.

- Consistency: The extent to which the data is consistent within itself and with other data sources.

Data assessment is an important part of any data-driven project. By ensuring that the data is of high quality, you can improve the accuracy and reliability of your results.

Benefits of data assessment:

There are many benefits to data assessment, including:

- Improved decision-making: Data assessment tells you how far your data can be trusted. The trends and patterns you find in it, and the decisions you base on them, are therefore more reliable.

- Increased efficiency: Data assessment can help you to find problems that waste time and storage, such as duplicate records, and gaps such as missing data. You can then fix them before they slow down later work.

- Reduced risk: Data assessment can help you to identify potential risks in your data, such as data that is out of date or inaccurate.

Methods for data assessment:

Many different methods can be used to assess data quality. Some common methods include:

- Data profiling: Data profiling is the process of collecting and analyzing data about the data itself. This can include information such as the number of records, the data types, and the distribution of values.

- Data sampling: Data sampling is the process of selecting a subset of data for analysis. If the subset is representative of the full data set, issues found in the sample are likely to be present in the full data as well. They can therefore be identified without examining every record.

- Data quality rules: Data quality rules are a set of criteria that can be used to assess the quality of data. These rules can be used to identify missing values, incorrect data types, and inconsistencies.

- Data quality metrics: Data quality metrics are a set of measurements that can be used to quantify the quality of data. These metrics can be used to track the improvement of data quality over time.

The best method for assessing data quality will vary depending on the specific data set and the intended purpose of the assessment. However, all of the methods mentioned above can help you find the issues that need fixing, which in turn improves the quality of your data and the decisions you base on it.

Data profiling:

Data profiling is the process of collecting and analyzing data about the data itself. This can include information such as the number of records, the data types, the distribution of values, and the presence of missing values or outliers. Data profiling can be used to identify potential issues with the data, such as data that is incomplete, inaccurate, or inconsistent.
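As a minimal sketch of profiling, the following Python function computes a few of the statistics mentioned above for records held as a list of dictionaries:

- the record count
- the value types seen in each column
- missing and distinct counts
- the minimum and maximum of numeric values

The function names `profile` and `is_missing`, the sample records, and the choice to treat `None` and blank strings as missing are assumptions for illustration. Dedicated profiling tools report far more.

```python
from collections import Counter


def is_missing(value):
    # Assumption: None and empty or whitespace-only strings count as missing.
    return value is None or (isinstance(value, str) and value.strip() == "")


def profile(records):
    """Return a simple per-column profile of a list of dict records."""
    columns = sorted({key for record in records for key in record})
    report = {"record_count": len(records), "columns": {}}
    for column in columns:
        # A key absent from a record is treated the same as an explicit None.
        values = [record.get(column) for record in records]
        present = [v for v in values if not is_missing(v)]
        numeric = [v for v in present
                   if isinstance(v, (int, float)) and not isinstance(v, bool)]
        report["columns"][column] = {
            "types": dict(Counter(type(v).__name__ for v in present)),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "min": min(numeric) if numeric else None,
            "max": max(numeric) if numeric else None,
        }
    return report


# Hypothetical sample records.
customers = [
    {"id": 1, "name": "Ada", "age": 36},
    {"id": 2, "name": "", "age": "41"},   # empty name, age stored as text
    {"id": 3, "name": "Grace", "age": None},
]
print(profile(customers)["columns"]["age"])
# {'types': {'int': 1, 'str': 1}, 'missing': 1, 'distinct': 2, 'min': 36, 'max': 36}
```

The mixed `int` and `str` types in the `age` column, and the missing values in `age` and `name`, are exactly the kind of issues profiling is meant to surface.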

Here are some of the benefits of data profiling:

- Identifying potential issues: Data profiling can help you to identify potential issues with your data, such as missing values, incorrect data types, and inconsistencies. This can help you to take steps to correct these issues before they impact your data analysis.

- Improving data quality: Data profiling can help you to improve the quality of your data by identifying and correcting potential issues. This can lead to more accurate and reliable results from your data analysis.

- Making better decisions: By understanding the quality of your data, you can make better decisions about how to use it. For example, if you know that your data is incomplete, you may need to collect additional data before you can make a decision.

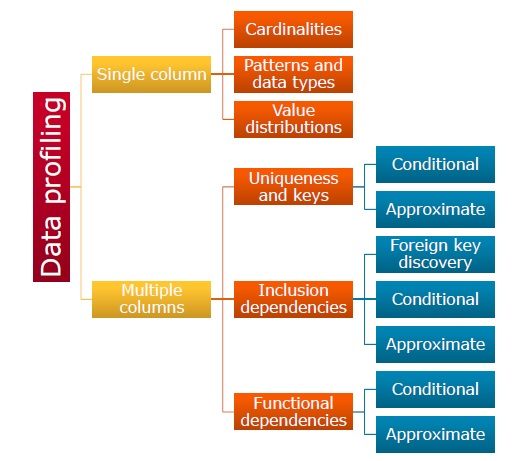

Here is an image that illustrates the data profiling process:

The data profiling process typically involves the following steps:

- Data collection: The first step is to collect the data that you want to profile. This data can come from a variety of sources, such as databases, spreadsheets, and text files.

- Data preparation: Once you have collected your data, you may need to prepare it so it can be analyzed, for example by loading it into a consistent structure. Avoid correcting typos or filling in missing values at this stage. Profiling is meant to measure those problems, and fixing them first hides them.

- Data analysis: Once your data has been prepared, you can begin to analyze it. This can involve tasks such as identifying the data types, calculating summary statistics, and identifying patterns and trends.

- Reporting: The final step is to report your findings. This can be done in a variety of ways, such as creating a report, giving a presentation, or publishing a paper.

Data profiling is an important part of the data analysis process. By profiling your data, you can identify potential issues, improve the quality of your data, and make better decisions.

Data sampling:

Data sampling is the process of selecting a subset of data from a larger population for analysis. This is usually done to save time and resources: a well-chosen sample can give results close to those from the full data set at a fraction of the cost.

Two common types of data sampling are random sampling and stratified sampling. Other types, such as systematic and cluster sampling, also exist.

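- Random sampling involves selecting data points from the population at random, so that each one has the same chance of being chosen. This is the simplest type of sampling. However, when the population is made up of groups of very different sizes, small groups may be under-represented or missed entirely.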

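- Stratified sampling involves dividing the population into groups, or strata, and then randomly selecting data points from each group. When the groups differ from one another, this usually gives more precise results than a random sample of the same size. It does require knowing which group each data point belongs to, and it takes more effort to set up.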
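Both approaches can be sketched with Python's standard `random` module. The function names, the `key` and `fraction` parameters, and the example population are hypothetical. The stratified version uses proportional allocation, taking the same fraction from every group; this is only one of several ways to size strata.

```python
import random
from collections import Counter, defaultdict


def simple_random_sample(population, n, seed=None):
    """Pick n items; every item has the same chance of being chosen."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def stratified_sample(population, key, fraction, seed=None):
    """Sample the same fraction (0 to 1) from each group defined by key."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for item in population:
        strata[key(item)].append(item)
    sample = []
    for group in strata.values():
        # Take at least one item per stratum so small groups are represented.
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample


# Hypothetical population: 90 records from region "north", 10 from "south".
population = [{"id": i, "region": "north" if i < 90 else "south"}
              for i in range(100)]

srs = simple_random_sample(population, 20, seed=1)
strat = stratified_sample(population, key=lambda r: r["region"],
                          fraction=0.2, seed=1)
print(sorted(Counter(r["region"] for r in strat).items()))
# [('north', 18), ('south', 2)]
```

Both samples contain 20 records. The stratified sample always contains exactly 2 "south" records. A simple random sample of the same size can, by chance, contain none of them at all.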

Here are some of the benefits of data sampling:

- Reduced time and resources: Data sampling can save time and resources by reducing the amount of data that needs to be analyzed.

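- Reliable estimates: A well-designed sample, especially a stratified one, can give estimates close to those from the full data set. The loss of accuracy from sampling can be estimated and kept within an acceptable margin.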

- Increased flexibility: Data sampling makes it possible to analyze data sets that are too large to process in full with the available tools or time.

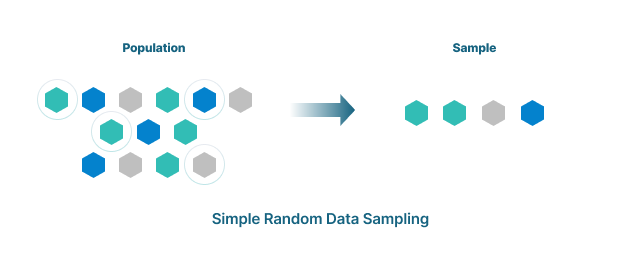

Here is an image that illustrates the data sampling process:

The data sampling process typically involves the following steps:

- Define the population: The first step is to define the population that you want to sample. This population can be anything from a group of people to a collection of data points.

- Determine the sample size: The next step is to determine the sample size. This is the number of data points that you want to sample. The sample size will depend on the size of the population, the level of accuracy that you need, and the resources that you have available.

- Select the sample: The final step is to select the sample. This can be done using random sampling or stratified sampling.
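One common way to turn "the level of accuracy that you need" into a number is Cochran's formula for estimating a proportion, with a finite population correction:

- n0 = z^2 * p * (1 - p) / e^2
- n = n0 / (1 + (n0 - 1) / N)

Here:

- z is the z-score for the chosen confidence level (1.96 for about 95%).
- e is the acceptable margin of error.
- p is the expected proportion (0.5 is the cautious default when it is unknown).
- N is the population size.

This is one standard approach among several. The function name and defaults below are illustrative assumptions.

```python
import math


def sample_size(population_size, margin_of_error=0.05, z=1.96, p=0.5):
    """Cochran's sample size for a proportion, with finite population correction.

    z=1.96 corresponds to roughly 95% confidence; p=0.5 gives the largest
    (most conservative) sample size when the true proportion is unknown.
    """
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    # Finite population correction: smaller populations need fewer samples.
    n = n0 / (1 + (n0 - 1) / population_size)
    return math.ceil(n)


print(sample_size(10_000))  # 370
print(sample_size(500))     # 218
```

Under these assumptions, the required sample grows much more slowly than the population. Ten thousand records need only about 370 sampled for a margin of error of plus or minus 5% at 95% confidence.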

Data sampling is a powerful tool that can be used to improve the efficiency of data analysis. By understanding the different types of data sampling and the factors that affect the accuracy of the results, you can get reliable answers from your data at a fraction of the cost of analyzing all of it.

Data quality rules:

Data quality rules are a set of criteria that can be used to assess the quality of data. These rules can be used to identify missing values, incorrect data types, and inconsistencies.

There are two main types of data quality rules: business rules and technical rules.

- Business rules are rules that are specific to the business domain. These rules define what the data must contain to be correct for the business, such as which values are allowed (for example, which order statuses are valid) and which ranges make sense.

- Technical rules are rules that are specific to the data format. These rules define the technical requirements for the data, such as the data types, the maximum lengths, and the formats.

Here are some of the benefits of using data quality rules:

- Improved data quality: Data quality rules can help to improve the quality of data by identifying and correcting potential issues. This can lead to more accurate and reliable results from data analysis.

- Reduced risk: Data quality rules can help to reduce the risk of errors and inconsistencies in data analysis. This can help to protect the organization from financial losses, legal liability, and reputational damage.

- Increased efficiency: Data quality rules can help to increase the efficiency of data analysis by automating the process of identifying and correcting potential issues. This can free up time for analysts to focus on other tasks, such as developing insights and making recommendations.

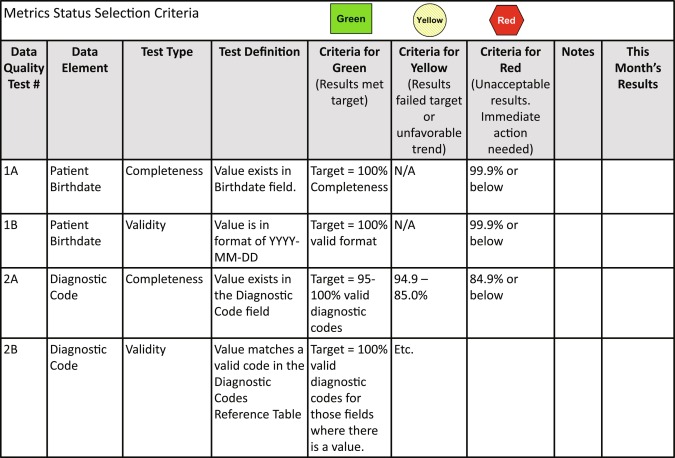

Here is an image that illustrates the data quality rules process:

The data quality rules process typically involves the following steps:

- Identify the business rules: The first step is to identify the business rules that apply to the data. These rules can be obtained from business stakeholders, such as data stewards and business analysts.

- Identify the technical rules: The next step is to identify the technical rules that apply to the data. These rules can be obtained from data engineers and database administrators.

- Create data quality rules: The final step is to create data quality rules that enforce the business and technical rules. These rules can be implemented in a variety of ways, such as using data profiling tools, data quality management tools, or custom code.

Data quality rules are an important part of any data-driven organization. By implementing data quality rules, organizations can improve the quality of their data, reduce the risk of errors and inconsistencies, and increase the efficiency of data analysis.

Here are some additional things to keep in mind when creating data quality rules:

- The rules must be specific: The rules must be specific enough to identify potential issues. For example, a rule that states “the customer name must be a valid name” is too vague. A better rule would be “the customer name must be at least 3 characters long and no more than 100 characters long.”

- The rules must be accurate: They must correctly reflect the business requirements and the technical requirements for the data.

- The rules must be complete: They must cover all of the requirements that matter for how the data will be used.

- The rules must be easy to understand: They must be written in plain language and avoid technical jargon, so that business stakeholders can review them.
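Data quality rules like these can be expressed as small named checks applied to every record. The Python sketch below combines three checks:

- the customer-name length rule from the example above
- a hypothetical type rule (the order date must be a real date)
- a hypothetical business rule (an allowed set of order statuses)

The rule names, field names, and status values are invented for illustration. Real data quality tools provide their own rule syntax.

```python
from datetime import date

# Each rule: (hypothetical rule name, field, check that returns True if valid).
RULES = [
    # The customer-name length rule from the example above.
    ("customer_name_length", "customer_name",
     lambda v: isinstance(v, str) and 3 <= len(v) <= 100),
    # Hypothetical technical rule: the value must be a date, not text.
    ("order_date_is_date", "order_date",
     lambda v: isinstance(v, date)),
    # Hypothetical business rule: only these statuses are allowed.
    ("status_allowed", "status",
     lambda v: v in {"open", "shipped", "cancelled"}),
]


def check(records, rules=RULES):
    """Return a list of (record index, rule name, offending value) violations."""
    violations = []
    for i, record in enumerate(records):
        for name, field, is_valid in rules:
            value = record.get(field)
            if not is_valid(value):
                violations.append((i, name, value))
    return violations


orders = [
    {"customer_name": "Alice Smith", "order_date": date(2024, 5, 1), "status": "open"},
    {"customer_name": "Al", "order_date": "2024-05-01", "status": "lost"},
]
for violation in check(orders):
    print(violation)
```

Every check in this sketch fails for the second record and passes for the first. Keeping each rule small and named makes the violations easy to report and easy for stakeholders to review.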

Data quality metrics:

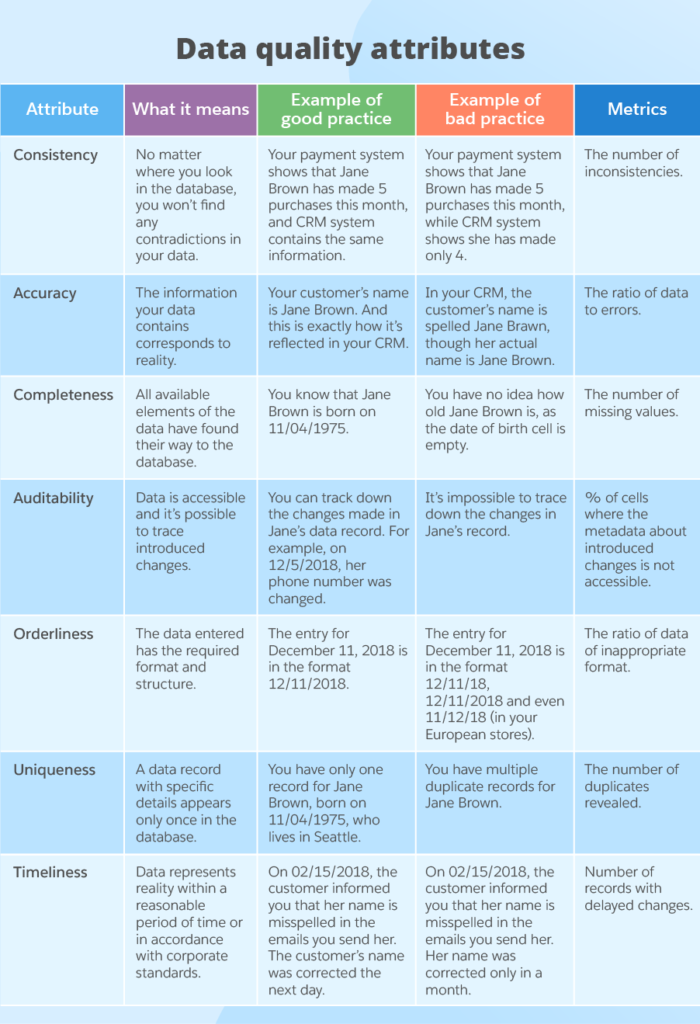

Data quality metrics are a set of measurements that can be used to quantify the quality of data. These metrics can be used to track the improvement of data quality over time.

Here are some of the most common data quality metrics:

- Accuracy: The accuracy of data is the extent to which it is correct and free of errors. This can be measured by comparing values against a trusted reference source and calculating the percentage that match.

- Completeness: The completeness of data is the extent to which it includes all relevant information. This can be measured by calculating the percentage of required fields that are populated.

- Relevance: The relevance of data is the extent to which it is useful for the intended purpose. This can be measured by asking users to rate the usefulness of the data.

- Timeliness: The timeliness of data is the extent to which it is up to date. This can be measured by calculating the average age of the data, for example the average time since each record was last updated.

- Consistency: The consistency of data is the extent to which it is internally consistent and consistent with other data sources. This can be measured by counting the records that contradict themselves or disagree with the same records in another source.
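The measurements above can be sketched in a few Python functions. The following are all hypothetical:

- the field names
- the `trusted` reference lookup used for accuracy
- the `crm` copy used for consistency
- the fixed "today" date

Relevance is left out because it is measured by asking users rather than by computation.

```python
from datetime import date


def completeness(records, required_fields):
    """Percentage of required field values that are populated."""
    total = len(records) * len(required_fields)
    filled = sum(1 for r in records for f in required_fields
                 if r.get(f) not in (None, ""))
    return 100.0 * filled / total if total else 100.0


def compare(records, other, key, field):
    """Return (records checked, records that agree) against another source."""
    checked = [r for r in records if r[key] in other]
    agree = sum(1 for r in checked if r.get(field) == other[r[key]])
    return len(checked), agree


def accuracy(records, trusted, key, field):
    """Percentage of checkable records whose value matches a trusted reference."""
    checked, agree = compare(records, trusted, key, field)
    return 100.0 * agree / checked if checked else None


def inconsistencies(records, other_system, key, field):
    """Number of records that disagree with another system's copy."""
    checked, agree = compare(records, other_system, key, field)
    return checked - agree


def average_age_days(records, field, today):
    """Timeliness: mean number of days since each record was last updated."""
    ages = [(today - r[field]).days for r in records]
    return sum(ages) / len(ages)


customers = [
    {"id": 1, "email": "ada@example.com", "country": "UK", "updated": date(2024, 3, 1)},
    {"id": 2, "email": "", "country": "US", "updated": date(2024, 1, 1)},
    {"id": 3, "email": "grace@example.com", "country": "UK", "updated": date(2024, 2, 1)},
    {"id": 4, "email": "linus@example.com", "country": None, "updated": date(2023, 12, 1)},
]
trusted = {1: "UK", 2: "US", 3: "US"}   # hypothetical verified reference data
crm = {1: "FR", 2: "US"}                # hypothetical copy in another system

print(completeness(customers, ["email", "country"]))              # 75.0
print(round(accuracy(customers, trusted, "id", "country"), 1))    # 66.7
print(inconsistencies(customers, crm, "id", "country"))           # 1
print(average_age_days(customers, "updated", date(2024, 3, 31)))  # 75.0
```

A record that is missing from the reference, such as `id` 4 here, is simply not counted toward accuracy. How to treat records that cannot be verified is a policy choice each organization has to make.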

Here are some of the benefits of using data quality metrics:

- Improved data quality: Data quality metrics can help to improve the quality of data by making potential issues visible and showing whether corrections are working. This can lead to more accurate and reliable results from data analysis.

- Reduced risk: Data quality metrics can help to reduce the risk of errors and inconsistencies in data analysis. This can help to protect the organization from financial losses, legal liability, and reputational damage.

- Increased efficiency: Data quality metrics can help to increase the efficiency of data analysis by pointing analysts to the data that most needs attention, instead of leaving them to search for problems manually. This can free up time for analysts to focus on other tasks, such as developing insights and making recommendations.

The image at the start of this section illustrates the data quality metrics process.

The data quality metrics process typically involves the following steps:

- Identify the data quality metrics: The first step is to identify the data quality metrics that are important for the organization. These metrics can be obtained from business stakeholders, such as data stewards and business analysts.

- Collect data quality metrics: The next step is to collect data quality metrics. This can be done manually or using automated tools.

- Analyze data quality metrics: The final step is to analyze data quality metrics. This can be done using statistical tools or by creating dashboards and reports.

Data quality metrics are an important part of any data-driven organization. By collecting and analyzing data quality metrics, organizations can improve the quality of their data, reduce the risk of errors and inconsistencies, and increase the efficiency of data analysis.

Here are some additional things to keep in mind when using data quality metrics:

- The metrics must be relevant: The metrics must be relevant to the organization’s goals and objectives.

- The metrics must be accurate: They must be calculated correctly, from data that is itself accurate.

- The metrics must be timely: They must be updated regularly.

- The metrics must be communicated: The metrics must be communicated to the relevant stakeholders. This will help to ensure that everyone is aware of the data quality and that any potential issues are addressed.

Why is data assessment important in data cleansing?



Data assessment is important in the data cleansing process because it shows what is wrong with the data before cleansing begins. Data cleansing can be a time-consuming and expensive process. Without an assessment, it is easy to spend that effort on minor problems while missing the most serious ones.

Here are some of the reasons why data assessment is important in the data cleansing process:

- Identifying potential issues: Data assessment can help to identify potential issues with the data, such as missing values, incorrect data types, or inconsistencies. Knowing about these issues in advance lets you choose the right cleansing steps and check afterwards that the data was cleaned correctly.

- Prioritizing data cleansing tasks: Data assessment can help to prioritize data cleansing tasks. By identifying the most important data issues, data cleansers can focus their efforts on cleaning the most critical data first.

- Measuring the success of data cleansing: Data assessment can be used to measure the success of data cleansing. By comparing the data before and after cleansing, data cleansers can determine how effective the cleansing process was and identify any areas that need further improvement.
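Comparing the data before and after cleansing can be as simple as computing the same quality measures on both versions. The Python sketch below is illustrative only. The following are all hypothetical:

- `quality_snapshot` and `improvement`
- the field names
- the single deduplication step standing in for a full cleansing process

```python
def quality_snapshot(records, key_fields, required_fields):
    """Count duplicate records (by key_fields) and missing required values."""
    seen = set()
    duplicates = 0
    for r in records:
        key = tuple(r.get(f) for f in key_fields)
        if key in seen:
            duplicates += 1
        seen.add(key)
    missing = sum(1 for r in records for f in required_fields
                  if r.get(f) in (None, ""))
    return {"duplicates": duplicates, "missing": missing}


def improvement(before, after):
    """How many issues of each kind the cleansing removed."""
    return {metric: before[metric] - after[metric] for metric in before}


raw = [
    {"email": "ada@example.com", "name": "Ada"},
    {"email": "ada@example.com", "name": "Ada"},   # duplicate
    {"email": "grace@example.com", "name": ""},    # missing name
]
# Hypothetical cleansing step: drop exact duplicates, keeping the original order.
cleansed = [dict(t) for t in dict.fromkeys(tuple(sorted(r.items())) for r in raw)]

before = quality_snapshot(raw, ["email"], ["name"])
after = quality_snapshot(cleansed, ["email"], ["name"])
print(before, after, improvement(before, after))
```

Here the duplicate is gone, but the missing name is still there. That remaining issue is the kind of area the comparison flags for further improvement.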

Overall, data assessment is an important part of the data cleansing process. By identifying potential issues early on and prioritizing data cleansing tasks, data assessment can help to make the data cleansing process more efficient and effective.

Conclusion:

Data assessment is an important part of any data-driven project. By ensuring that the data is of high quality, you can improve the accuracy and reliability of your results.